Upshifting My Assumptions

Racing Heart has already been mentioned if you’ve ventured over to the creative section of this site. If not, TL;DR: Forum game using the sim Automobilista to run races and serve as a platform to write lore and invent characters.

Part of the enjoyment of Racing Heart for me is building spreadsheets and trying to engineer my decisions. Without that aspect, I’d enjoy it as much as if you threw me a stick and told me to go dowsing.

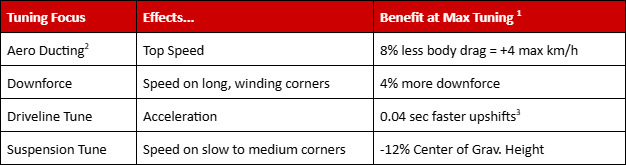

Part of the gameplay rules includes setup choices for each round, comprised of the following:

The cars and data are all readily accessible, so we can see how the cars start off and get some attributes to model. I refuse to actually test the cars in game, because that sort of defeats the point of the offline management aspect if we can run our own testing whenever we want. Anything out of the game is fair game to me though, hence my worship of spreadsheets.

For this entry, I’m going to focus on the straight-line maths and the assumptions I made, and why that totally misled me on setup. There are two setup choices here, Air Ducts and Driveline. The former reduces drag, and the latter improves gear change time. At the extremes there’s a ~5% total car drag reduction based on my ISIMotor aero calculator (roughly, at least. I can’t control ride heights), and a 0.04 second reduction in gear change time.

And disclaimer: These are attempts to find trends and get an idea of the sort of magnitudes of improvements, not hard evidence or specific measurements.

A bad assumption:

I started off by getting an estimate of car behaviour around a lap using OptimumLap. It’s free, I could mock up an estimate of the car, and I just needed a rough idea of the number of upshifts per lap, the average speed, and the throttle percentage. Outright accuracy wasn’t important. With those numbers I could begin estimating:

Drag:

Power consumed by drag came from average speed in the lowest and highest drag configuration.

Power required = drag * speed^3

From there, this was converted into energy gained over a total lap by taking the time on throttle.

Energy = Power required * time on throttle

Gear change speed:

The extra energy per lap was decided:

Energy = no. of upshifts * shift time * average power

Where average power was taken by averaging the power made over a range of rpm. This came to two orders of magnitude lower than the improvement in drag (<1 vs >10). It seemed like a no brainer to write off quicker shift times.
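For anyone who wants to poke at that first estimate, here is a minimal Python sketch of the two formulas. It assumes "drag" is the lumped term 0.5 * air density * CdA, and that "shift time" means the shift time saved per upshift; both are my reading of the formulas rather than something spelled out above. Every input value is a placeholder, not a number from my spreadsheet, so the printed figures say nothing about the actual car.

```python
RHO = 1.225  # air density in kg/m^3 (assumed sea-level value)


def drag_power(cda, speed):
    """Power (W) needed to overcome drag at `speed` (m/s): 0.5 * rho * CdA * v^3."""
    return 0.5 * RHO * cda * speed ** 3


def drag_energy_saved(cda_high, cda_low, avg_speed, time_on_throttle):
    """Energy (J) per lap freed up by the lower-drag setup, evaluated at average speed."""
    saved_power = drag_power(cda_high, avg_speed) - drag_power(cda_low, avg_speed)
    return saved_power * time_on_throttle


def shift_energy_gained(upshifts, shift_time_saved, avg_power):
    """Energy (J) per lap delivered in the time no longer spent mid-shift."""
    return upshifts * shift_time_saved * avg_power


# Placeholder inputs for illustration only (hypothetical car and lap).
drag_j = drag_energy_saved(cda_high=1.00, cda_low=0.95,
                           avg_speed=45.0, time_on_throttle=70.0)
shift_j = shift_energy_gained(upshifts=20, shift_time_saved=0.04,
                              avg_power=250_000.0)
print(f"drag: {drag_j:.0f} J per lap, shifts: {shift_j:.0f} J per lap")
```

The result swings heavily with the chosen average speed and average power, which is worth keeping in mind for what follows.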

The Rethink:

I’m the arrogant type who thinks he’s found an unfair advantage and insists on it to a worrying degree. So I had to politely eat my words when presented with some hard data:

baseline @ suzuka, no changes: bestlap 1:50.716, stint 48:08.572

with geartune, 0.16s -> 0.06s shift time: bestlap 1:49.807, stint 47:46.223

with geartune (less), 0.16s -> 0.11s shift time: bestlap 1:50.250, stint 47:54.515

(The stints are 26 laps. A stint made up entirely of best laps would have been 0.345% faster than the actual stint for the baseline, 0.39% faster for the 0.06s shift time, and 0.28% faster for the 0.11s shift time.)
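Those percentages are easy to check. A short Python sketch using only the lap and stint times quoted above; the helper names are just for illustration:

```python
LAPS = 26  # stint length quoted above

# (best lap, stint time) for each run, copied from the data above
RUNS = {
    "baseline, 0.16s shifts": ("1:50.716", "48:08.572"),
    "geartune, 0.06s shifts": ("1:49.807", "47:46.223"),
    "geartune (less), 0.11s shifts": ("1:50.250", "47:54.515"),
}


def to_seconds(stamp):
    """Convert an 'm:ss.sss' time string to seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)


def ideal_stint_gain_pct(best_lap, stint, laps=LAPS):
    """How much faster (%) a stint of nothing but best laps would be than the real stint."""
    ideal = to_seconds(best_lap) * laps
    actual = to_seconds(stint)
    return (actual - ideal) / actual * 100.0


for label, (best_lap, stint) in RUNS.items():
    gain = ideal_stint_gain_pct(best_lap, stint)
    print(f"{label}: {gain:.3f}% faster if every lap matched the best lap")
```

The same helpers also give the raw stint gaps: subtracting `to_seconds` of two stint times shows how many seconds each shift time saved over the 26 laps.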

Nearly half a second of lap time saved even with the smaller improvement. This is a very apples-to-oranges comparison because we’re comparing lap time to energy, and different improvements in shift time. But given there haven’t been absurd differences in lap time between cars in the campaign to reflect an order of magnitude difference between setup choices, my gut feeling is that my original method was very far off the mark.

It seemed obvious to me, even as I was running the numbers, that using average speed was not going to be accurate because drag isn’t linear: the force rises with the square of speed, and the power with the cube. But because the result was an order of magnitude greater and drag is always an issue whereas a quicker shift time only applies during a gear change, I felt it was an acceptable simplification because it proved a point (namely, my gut assumption).
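To put a number on how much the average-speed shortcut can skew things, here is a small Python sketch comparing the cube of the average speed with the average of the cubed speeds. The speed samples are invented for illustration, not taken from OptimumLap or the game.

```python
def cube_of_mean(speeds):
    """What the shortcut uses: average the speed first, then cube it."""
    mean = sum(speeds) / len(speeds)
    return mean ** 3


def mean_of_cubes(speeds):
    """What drag power actually follows: cube each speed, then average."""
    return sum(v ** 3 for v in speeds) / len(speeds)


# Hypothetical speed samples around a lap, in m/s (made up for this example).
samples = [30.0, 50.0, 70.0]

shortcut = cube_of_mean(samples)
proper = mean_of_cubes(samples)
print(f"shortcut: {shortcut:.0f}, proper: {proper:.0f}, ratio: {proper / shortcut:.2f}")
```

For any set of non-negative speeds that aren't all identical, the mean of the cubes comes out higher than the cube of the mean, so the shortcut always understates the average power spent on drag. That alone doesn't explain my original result, but it shows the estimate was biased before anything else went wrong.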

The New Plan:

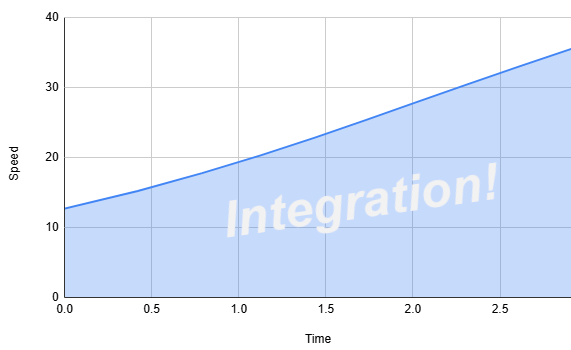

Taking the torque curve, calculating speed at each RPM, and then getting a rough idea of the time to accelerate through each gear, including drag. This was more a case of sanity checking than getting every number right. So the output is not immensely accurate, but at least I could whip it up while still in bed using data I had immediate access to. For the gear change, I held the car at the speed of the top of the gear for the duration of the change.

And a better way to compare came through this. Taking speed and time produces a distance travelled to accelerate. The speed range chosen was 68mph (~110km/h) to 106mph (~171km/h). I just picked two ratios that lined up, because I’m choosing to be lazy and neglect specifics that don’t change the comparison I’m trying to make.

Anyway:

68mph to 106mph, one gearchange:

Stock, worst of everything setup: 126.5m

Quicker shifts: 125.1m (-1.4m / 1.14% improvement)

Lower drag: 125.8m (-0.7m / 0.59% improvement)

Obviously this is a sample size of one, without many details considered, and an extremely coarse integration. Take the specifics with a grain of salt.
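For the curious, the method can be sketched in Python roughly like this. It is not my actual spreadsheet: the mass, wheel radius, final drive, gear ratios, torque curve, CdA and base shift time are all hypothetical placeholders, so the distances it prints will not match the figures above. Only the 68mph to 106mph range, the 0.04 second shift improvement and the ~5% drag reduction come from this post. Like the spreadsheet, it holds the car at the top-of-gear speed for the duration of the shift.

```python
import math

RHO = 1.225          # air density, kg/m^3
MASS = 1000.0        # hypothetical car mass, kg
WHEEL_RADIUS = 0.33  # hypothetical driven wheel radius, m
FINAL_DRIVE = 3.5    # hypothetical final drive ratio
MPH = 0.44704        # metres per second in one mph
# Hypothetical torque curve as (rpm, Nm) points.
TORQUE_CURVE = [(4000, 300.0), (5000, 330.0), (6000, 340.0),
                (7000, 320.0), (8000, 290.0)]


def torque_at(rpm):
    """Linearly interpolate the torque curve, clamping outside its range."""
    if rpm <= TORQUE_CURVE[0][0]:
        return TORQUE_CURVE[0][1]
    for (r0, t0), (r1, t1) in zip(TORQUE_CURVE, TORQUE_CURVE[1:]):
        if rpm <= r1:
            return t0 + (t1 - t0) * (rpm - r0) / (r1 - r0)
    return TORQUE_CURVE[-1][1]


def net_acceleration(speed, gear_ratio, cda):
    """(Drive force at the wheels minus aero drag) / mass. No rolling resistance."""
    overall = gear_ratio * FINAL_DRIVE
    rpm = speed / WHEEL_RADIUS * overall * 60.0 / (2.0 * math.pi)
    drive = torque_at(rpm) * overall / WHEEL_RADIUS
    drag = 0.5 * RHO * cda * speed ** 2
    return (drive - drag) / MASS


def distance_covered(v_start, v_shift, v_end, gears, shift_time, cda, dt=0.001):
    """Distance (m) from v_start to v_end with one upshift at v_shift (coarse Euler steps)."""
    v, x = v_start, 0.0
    for index, (ratio, target) in enumerate(zip(gears, (v_shift, v_end))):
        while v < target:
            a = net_acceleration(v, ratio, cda)
            if a <= 0:
                raise ValueError("car cannot reach the target speed in this gear")
            v += a * dt
            x += v * dt
        if index == 0:
            x += v * shift_time  # speed held constant while the shift happens
    return x


v0, v1, v_shift = 68 * MPH, 106 * MPH, 39.5  # shift speed is a placeholder
gears = (2.0, 1.6)                           # placeholder ratios
base = distance_covered(v0, v_shift, v1, gears, shift_time=0.10, cda=1.00)
quick = distance_covered(v0, v_shift, v1, gears, shift_time=0.06, cda=1.00)
slippery = distance_covered(v0, v_shift, v1, gears, shift_time=0.10, cda=0.95)
print(f"stock: {base:.1f}m, quicker shifts: {quick:.1f}m, lower drag: {slippery:.1f}m")
```

One thing this makes obvious: because speed is held constant during the shift, the distance saved by the quicker shift is simply the shift speed multiplied by the time saved.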

The Takeaways:

Even at fairly significant speeds, the benefit of lower drag under acceleration is minimal. As I was playing about with different gear ratios, comparing low and high drag accelerating across varying speed ranges always created pretty tiny differences unless the higher drag car was near V-max. This feels naive to say without experimenting more, but it appears that chasing lower drag is largely futile if you've got any significant excess of power. It does make a huge difference at the cusp of top speed, though. Besides that, I’m not taking rolling resistance into account, which would reduce the overall percentage effect drag has on the car (right now, it's the only resistance modelled). On one hand, that means lowering drag is lowering the total resistance by a smaller amount, but on the other, it means there's less useful power available. Something to ponder and play with in future.

Improving gear change speeds had a much bigger effect than expected. And if I indulge in conjecture, faster shifts mean less time decelerating from resistance during the shift, so you’d start each new gear at a higher speed, which should become bigger and bigger gaps all the way down every straight. Again, doing an immensely basic estimation:

Speed Lost = (Drag Force / mass) * shift time

Fastest shift time: 0.09mph (0.15km/h) lost

Lowest drag: 0.14mph (0.23km/h) lost

…I don’t know if a benefit that small is going to be felt.
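The speed-lost estimate is a one-liner in Python. The drag force, mass and shift time below are hypothetical placeholders, not the inputs behind the two figures above; it also assumes drag is the only thing slowing the car and that it stays constant over the shift, which is reasonable for a fraction of a second.

```python
MPS_TO_MPH = 2.23694  # mph in one metre per second


def speed_lost_during_shift(drag_force, mass, shift_time):
    """Speed (m/s) shed while coasting through a shift with constant drag force (N)."""
    return drag_force / mass * shift_time


# Placeholder values for illustration only.
loss = speed_lost_during_shift(drag_force=700.0, mass=1000.0, shift_time=0.10)
print(f"{loss * MPS_TO_MPH:.2f} mph lost per shift")
```

Since the loss is linear in both drag force and shift time, a 5% drag cut trims the loss by 5%, while shaving a shift from, say, 0.10 to 0.06 seconds trims it by 40%.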

The question is if a few metres is worth it and the answer is a solid, “Eh, Maybe?” Shorter distances favour the shorter shift times over lower drag, and 125m isn’t a lot of distance on a racetrack, so this experiment is sorta rigged in shift time’s favour. I could see shorter shift times being especially advantageous closing in on braking zones, getting one last upshift in without the chance to wring out a whole new gear.

At higher speeds, quicker shifts are going to create wider margins (as you're coasting over a larger difference). Makes me wonder if that’s a second order benefit to having longer gears, although I’d imagine any benefit from that would get drowned out by the resulting less optimised gearing.

The only hard truth I’ve established is that my old logic was flawed, and even a tiny improvement in shift time can be surprisingly powerful.